Origin of the computational hardness for learning with binary synapses. Through supervised learning in a binary perceptron, one is able to classify an extensive number of random patterns by a proper assignment of ...
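As a rough illustration of the classification task described above (not code from the cited paper), the following Python sketch draws random ±1 input patterns with random ±1 labels and searches all 2^N binary weight vectors for one that classifies every pattern correctly. The helper names `all_binary_weights` and `find_solution` and the sizes N = 15, P = 6 are hypothetical choices. Exhaustive enumeration is only feasible for very small N.

```python
import itertools
import numpy as np

def all_binary_weights(n: int) -> np.ndarray:
    """Every assignment w in {-1, +1}^n, one per row (2**n rows)."""
    return np.array(list(itertools.product([-1, 1], repeat=n)), dtype=int)

def find_solution(xi: np.ndarray, labels: np.ndarray):
    """Return one binary weight vector that classifies every pattern, or None (hypothetical helper)."""
    candidates = all_binary_weights(xi.shape[1])
    # stabilities[k, mu] = label_mu * (w_k . xi_mu); positive means pattern mu is correctly classified
    stabilities = (candidates @ xi.T) * labels
    ok = np.all(stabilities > 0, axis=1)
    idx = np.flatnonzero(ok)
    return candidates[idx[0]] if idx.size else None

rng = np.random.default_rng(0)
n_inputs = 15      # odd N, so w . xi is never zero for +/-1 entries
n_patterns = 6     # P = alpha * N with alpha = 0.4 (illustrative choice)
xi = rng.choice([-1, 1], size=(n_patterns, n_inputs))
labels = rng.choice([-1, 1], size=n_patterns)

w = find_solution(xi, labels)
if w is not None:
    # sanity check: the returned weights reproduce every target label
    assert np.all(np.sign(xi @ w) == labels)
print(w)
```

The cost grows as 2^N, which is exactly why the hardness of finding such assignments for large N is a meaningful question.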

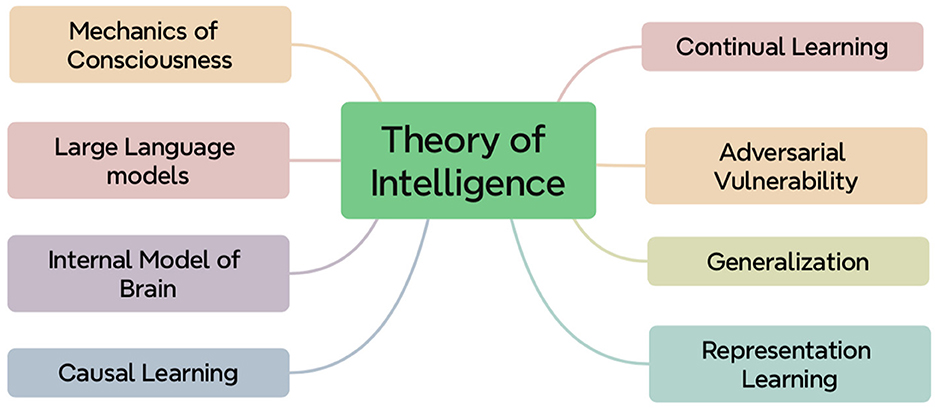

Eight challenges in developing theory of intelligence - Frontiers

Connectionism

Eight challenges in developing theory of intelligence - Frontiers. This type of learning is called continual learning or multi-task learning. ... Origin of the computational hardness for learning with binary synapses. Phys ...

Geometric Barriers for Stable and Online Algorithms for Discrepancy

*Counting and Hardness-of-Finding Fixed Points in Cellular Automata *

Geometric Barriers for Stable and Online Algorithms for Discrepancy. ... Origin of the computational hardness for learning with binary synapses. Physical Review E, 90(5):052813, 2014. Mark Jerrum. Large cliques elude the ...

Unreasonable effectiveness of learning neural networks: From

Unreasonable effectiveness of learning neural networks: From ... For the single-layer perceptron with binary synapses, using the energy definition ... Origin of the computational hardness for learning with binary synapses. Phys ...
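The energy definition is cut off in the snippet above. A common choice for the binary perceptron, assumed here rather than taken from the source, is the number of misclassified patterns, E(w) = Σ_μ Θ(−σ_μ w·ξ_μ). The sketch below minimizes this assumed energy with Metropolis single-flip simulated annealing. The annealing schedule, the sizes, and the function names `energy` and `anneal` are illustrative assumptions, and annealing is a generic local-search heuristic, not the analysis of the cited paper.

```python
import numpy as np

def energy(w: np.ndarray, xi: np.ndarray, labels: np.ndarray) -> int:
    """Number of patterns misclassified by binary weights w (assumed energy definition)."""
    return int(np.sum(labels * (xi @ w) <= 0))

def anneal(xi, labels, betas, rng):
    """Metropolis single-flip annealing over w in {-1,+1}^N, one sweep per inverse temperature."""
    n = xi.shape[1]
    w = rng.choice([-1, 1], size=n)
    e = energy(w, xi, labels)
    for beta in betas:
        for i in rng.permutation(n):
            w[i] = -w[i]                      # propose flipping one synapse
            e_new = energy(w, xi, labels)
            if e_new <= e or rng.random() < np.exp(-beta * (e_new - e)):
                e = e_new                     # accept the flip
            else:
                w[i] = -w[i]                  # reject: undo the flip
        if e == 0:
            break                             # every pattern is correctly classified
    return w, e

rng = np.random.default_rng(1)
n_inputs, n_patterns = 101, 50                # alpha = P / N ~ 0.5 (illustrative)
xi = rng.choice([-1, 1], size=(n_patterns, n_inputs))
labels = rng.choice([-1, 1], size=n_patterns)
w, e = anneal(xi, labels, betas=np.linspace(0.1, 5.0, 200), rng=rng)
print("final energy:", e)
```

Whether such a local search reaches zero energy depends on N, on the load P/N, and on the schedule; the sketch only makes the energy landscape concrete.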

Yoshiyuki Kabashima - Google Scholar

![Origin of the computational hardness for learning with binary synapses (PDF), Figure 1](https://ai2-s2-public.s3.amazonaws.com/figures/2017-08-08/cd5bc5ceceec5cfad831ecff833e05f203b97a4f/4-Figure1-1.png)

*[PDF] Origin of the computational hardness for learning with binary synapses*

Yoshiyuki Kabashima - Google Scholar. Origin of the computational hardness for learning with binary synapses. H Huang, Y Kabashima. Physical Review E 90 (5), 052813, 2014. Inference from ...

Origin of the computational hardness for learning with binary synapses. We analytically derive the Franz-Parisi potential for the binary perceptron problem, by starting from an equilibrium solution of weights and exploring the ...
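The Franz-Parisi construction fixes a reference solution and asks how many other solutions lie at a given overlap from it. For very small N this can be mimicked by brute force, as in the hypothetical Python sketch below: it enumerates all solutions, picks one uniformly at random as the reference (a zero-temperature stand-in for an equilibrium solution), and histograms the Hamming distances d to the other solutions, reporting the overlap q = 1 − 2d/N and log(count)/N. The helper names and sizes are assumptions, and this finite-size count is only an illustration, not the analytic Franz-Parisi potential derived in the paper.

```python
import itertools
import numpy as np

def solution_set(xi: np.ndarray, labels: np.ndarray) -> np.ndarray:
    """All w in {-1,+1}^N that classify every pattern (brute force, small N only)."""
    n = xi.shape[1]
    ws = np.array(list(itertools.product([-1, 1], repeat=n)), dtype=int)
    ok = np.all((ws @ xi.T) * labels > 0, axis=1)
    return ws[ok]

def distance_profile(solutions: np.ndarray, reference: np.ndarray) -> np.ndarray:
    """counts[d] = number of solutions at Hamming distance d from the reference."""
    n = reference.size
    dists = np.sum(solutions != reference, axis=1)
    return np.bincount(dists, minlength=n + 1)

rng = np.random.default_rng(2)
n_inputs, n_patterns = 17, 10                  # illustrative sizes
xi = rng.choice([-1, 1], size=(n_patterns, n_inputs))
labels = rng.choice([-1, 1], size=n_patterns)

sols = solution_set(xi, labels)
if len(sols) > 0:
    ref = sols[rng.integers(len(sols))]        # reference drawn uniformly from the solution set
    counts = distance_profile(sols, ref)
    for d, c in enumerate(counts):
        if c > 0:
            # overlap q = 1 - 2d/N; log(c)/N acts as a finite-N "local entropy"
            print(f"d={d:2d}  q={1 - 2 * d / n_inputs:+.3f}  s={np.log(c) / n_inputs:.3f}")
```

At such small N the profile is dominated by finite-size effects, so it should not be read as evidence for or against any asymptotic result.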

Generalization from correlated sets of patterns in the perceptron

Generalization from correlated sets of patterns in the perceptron. ... [21] Huang H and Kabashima Y 2014 Origin of the computational hardness for learning with binary synapses Phys. Rev. E 90 052813.

Efficiency of quantum vs. classical annealing in nonconvex learning

Statistical Mechanics of Neural Networks | SpringerLink

Efficiency of quantum vs. classical annealing in nonconvex learning. ... Huang H, Kabashima Y (2014) Origin of the computational hardness for learning with binary synapses. Phys Rev E 90:052813. 34. Horner H (1992) ...